Thanks! I will look into that… Can I ask questions here even if they are not about the models used at Mozilla?

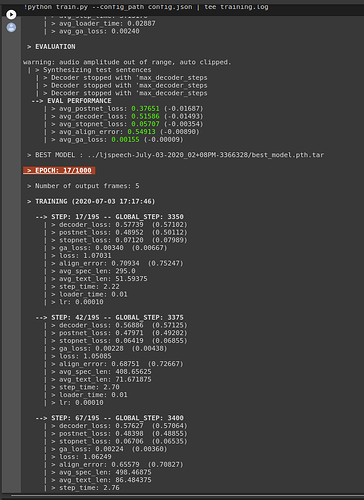

Anyway, I tried to run it on my computer (I have a 2080 with 8 GB of memory) and got this on the first step of the 1000. It is an OOM error. Is there a parameter I can set so that training fits?

--> STEP: 149/195 -- GLOBAL_STEP: 150

| > decoder_loss: 1.54959 (2.75099)

| > postnet_loss: 1.65185 (3.31417)

| > stopnet_loss: 0.33840 (0.53356)

| > ga_loss: 0.02369 (0.04075)

| > loss: 3.22514

| > align_error: 0.99233 (0.99067)

| > avg_spec_len: 705.203125

| > avg_text_len: 126.171875

| > step_time: 1.02

| > loader_time: 0.01

| > lr: 0.00010

! Run is removed from ../ljspeech-July-03-2020_03+56PM-3366328

Traceback (most recent call last):

File "train.py", line 676, in <module>

main(args)

File "train.py", line 591, in main

global_step, epoch)

File "train.py", line 191, in train

loss_dict['loss'].backward()

File "/home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/tensor.py", line 198, in backward

torch.autograd.backward(self, gradient, retain_graph, create_graph)

File "/home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/autograd/__init__.py", line 100, in backward

allow_unreachable=True) # allow_unreachable flag

RuntimeError: CUDA out of memory. Tried to allocate 110.00 MiB (GPU 0; 7.79 GiB total capacity; 4.37 GiB already allocated; 116.88 MiB free; 4.64 GiB reserved in total by PyTorch) (malloc at /opt/conda/conda-bld/pytorch_1587428398394/work/c10/cuda/CUDACachingAllocator.cpp:289)

frame #0: c10::Error::Error(c10::SourceLocation, std::string const&) + 0x4e (0x7f1127c94b5e in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libc10.so)

frame #1: + 0x1f39d (0x7f1127a5639d in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libc10_cuda.so)

frame #2: + 0x2058e (0x7f1127a5758e in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libc10_cuda.so)

frame #3: at::native::empty_cuda(c10::ArrayRef, c10::TensorOptions const&, c10::optional) + 0x291 (0x7f112a9ed461 in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)

frame #4: + 0xddcb6b (0x7f1128c9db6b in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)

frame #5: + 0xe26457 (0x7f1128ce7457 in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)

frame #6: + 0xdd3999 (0x7f114fc49999 in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #7: + 0xdd3cd7 (0x7f114fc49cd7 in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #8: + 0xd77a7e (0x7f1128c38a7e in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)

frame #9: + 0xd7a543 (0x7f1128c3b543 in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)

frame #10: at::native::cudnn_convolution_backward_input(c10::ArrayRef, at::Tensor const&, at::Tensor const&, c10::ArrayRef, c10::ArrayRef, c10::ArrayRef, long, bool, bool) + 0xb2 (0x7f1128c3bd82 in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)

frame #11: + 0xde18a0 (0x7f1128ca28a0 in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)

frame #12: + 0xe26138 (0x7f1128ce7138 in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)

frame #13: at::native::cudnn_convolution_backward(at::Tensor const&, at::Tensor const&, at::Tensor const&, c10::ArrayRef, c10::ArrayRef, c10::ArrayRef, long, bool, bool, std::array) + 0x4fa (0x7f1128c3d41a in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)

frame #14: + 0xde1bcb (0x7f1128ca2bcb in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)

frame #15: + 0xe26194 (0x7f1128ce7194 in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)

frame #16: + 0x29defc6 (0x7f1151854fc6 in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #17: + 0x2a2ea54 (0x7f11518a4a54 in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #18: torch::autograd::generated::CudnnConvolutionBackward::apply(std::vector >&&) + 0x378 (0x7f115146cf28 in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #19: + 0x2ae8215 (0x7f115195e215 in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #20: torch::autograd::Engine::evaluate_function(std::shared_ptr&, torch::autograd::Node*, torch::autograd::InputBuffer&) + 0x16f3 (0x7f115195b513 in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #21: torch::autograd::Engine::thread_main(std::shared_ptr const&, bool) + 0x3d2 (0x7f115195c2f2 in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #22: torch::autograd::Engine::thread_init(int) + 0x39 (0x7f1151954969 in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #23: torch::autograd::python::PythonEngine::thread_init(int) + 0x38 (0x7f1154c9b558 in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/libtorch_python.so)

frame #24: + 0xc819d (0x7f115770319d in /home/tyoc213/miniconda3/envs/fastai2/lib/python3.7/site-packages/torch/lib/../../../.././libstdc++.so.6)

frame #25: + 0x76db (0x7f1175e296db in /lib/x86_64-linux-gnu/libpthread.so.0)

frame #26: clone + 0x3f (0x7f1175b5288f in /lib/x86_64-linux-gnu/libc.so.6)
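Reading the numbers in the error message, here is a rough sketch of the arithmetic. It only parses the sizes quoted in the `RuntimeError` above; the helper name `parse_oom` and the "unaccounted" label are made up for illustration and are not part of PyTorch or the training script.

```python
import re

# The part of the RuntimeError above that carries the sizes, copied verbatim.
OOM_MESSAGE = (
    "CUDA out of memory. Tried to allocate 110.00 MiB (GPU 0; 7.79 GiB total capacity; "
    "4.37 GiB already allocated; 116.88 MiB free; 4.64 GiB reserved in total by PyTorch)"
)

UNIT_TO_MIB = {"MiB": 1.0, "GiB": 1024.0}


def parse_oom(message):
    """Hypothetical helper: return the sizes quoted in the message, in MiB."""
    patterns = {
        "requested": r"Tried to allocate ([\d.]+) (MiB|GiB)",
        "total": r"([\d.]+) (MiB|GiB) total capacity",
        "allocated": r"([\d.]+) (MiB|GiB) already allocated",
        "free": r"([\d.]+) (MiB|GiB) free",
        "reserved": r"([\d.]+) (MiB|GiB) reserved",
    }
    sizes = {}
    for name, pattern in patterns.items():
        match = re.search(pattern, message)
        if match is None:
            raise ValueError(f"could not find '{name}' in message")
        value, unit = match.groups()
        sizes[name] = float(value) * UNIT_TO_MIB[unit]
    return sizes


sizes = parse_oom(OOM_MESSAGE)

# Memory that is neither free nor reserved by this PyTorch process.
unaccounted = sizes["total"] - sizes["reserved"] - sizes["free"]

for name, mib in sizes.items():
    print(f"{name:>10}: {mib:8.2f} MiB")
print(f"{'unaccounted':>10}: {unaccounted:8.2f} MiB")
```

If the message is taken at face value, roughly 3 GiB of the card is neither free nor reserved by this process. The log does not say where that memory went; it may be other processes on the same GPU or driver overhead, which `nvidia-smi` should show. Lowering the batch size is the usual first thing to try either way.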
