Overview of the workshop:

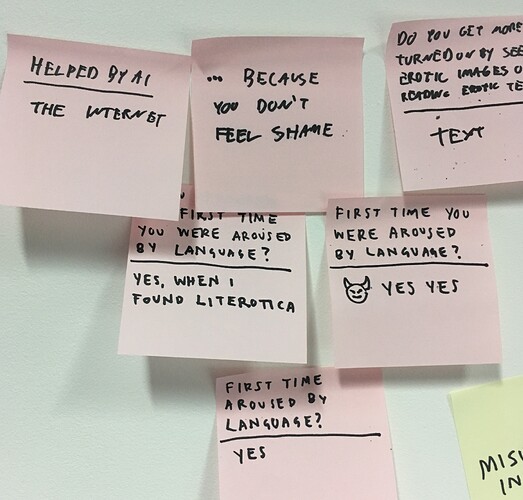

We hid research questions in the chatbot to gather participants’ thoughts and feelings about artificial intelligence, sex and language, social and emotional needs, and ways of coping with or externalizing intimate desires or difficult things.

We then used the responses to these questions in a design sprint to identify common threads, themes, and needs, and to draft a list of “How might we” (HMW) questions for improving our chatbot.

About the chatbot:

Currently, Queer AI is trained on 50,000+ conversational pairs derived from scripts in queer theatre. Authors featured in our dataset include Caryl Churchill, Jane Chambers, Harvey Fierstein, Jean Genet, Sarah Ruhl, Paula Vogel, and Oscar Wilde. The chatbot uses an RNN-based sequence-to-sequence (seq2seq) model, an architecture originally developed at Google for machine translation.
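For readers curious what "conversational pairs" derived from a script might look like, here is a minimal sketch. It is an illustration only, not a description of how the Queer AI dataset was actually built: the `make_pairs` helper, the rule of skipping consecutive lines by the same speaker, and the sample dialogue are all assumptions invented for this example.

```python
from typing import List, Tuple

Turn = Tuple[str, str]  # (speaker, utterance)


def make_pairs(turns: List[Turn]) -> List[Tuple[str, str]]:
    """Turn an ordered list of script lines into (prompt, response) pairs.

    Assumption: each line is paired with the line that follows it, and
    consecutive lines by the same speaker are not treated as an exchange.
    """
    pairs = []
    for (prev_speaker, prev_text), (next_speaker, next_text) in zip(turns, turns[1:]):
        if prev_speaker == next_speaker:
            continue  # same character still talking: no prompt/response here
        pairs.append((prev_text.strip(), next_text.strip()))
    return pairs


# Invented sample dialogue, not taken from any play in the dataset.
scene = [
    ("A", "Are you awake?"),
    ("B", "I am now."),
    ("B", "What is it?"),
    ("A", "I couldn't sleep."),
]

pairs = make_pairs(scene)
for prompt, response in pairs:
    print(f"{prompt!r} -> {response!r}")
```

A seq2seq model would then be trained to produce each response given its prompt, in the same way a translation model produces a target sentence given a source sentence.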

Some of our survey questions:

Can you describe a time you were helped by an artificial intelligence?

Can you describe a time you were harmed by an artificial intelligence?

Have you ever been misgendered by an AI?

Can you describe a time you were understood by AI?

Can you describe a time you were misunderstood by AI?

Have you ever sexted with a lover?

Have you ever sexted with a stranger?

Have you ever sexted with a machine?

Do you remember the first time you were aroused by language? Was it surprising?

Do you feel cared for?

Do you feel listened to and seen?

Do you have at least one person in your life that accepts you unconditionally?

Do you often feel misunderstood?

Do you ever feel lonely?

Do you keep a journal or diary?

Do you talk to god?

Do you find comfort in trusting your intimate thoughts or struggles with someone outside of your direct circle of friends and family, like a therapist or a spiritual counselor?

Do you think an artificial intelligence could help fulfill some of these emotional or existential needs? Why or why not?

In addition to the hidden survey questions, people’s typed interactions/responses with the chatbot included things like:

“You’re very opinionated.”

“You seem confused.”

“You are strange.”

“Are you flirting with me?”

“Don’t tell me what to do”

“Can you recommend what I should get my boyfriend for Christmas?”

“Are you alive?”

“Let’s slow down”

“Can you give me a compliment?”

“Why am I dissociating?”

“You are a lot. . .”

“. . .because you don’t feel shame?”

“People have been mean to you, haven’t they, lil chatbot.”

Design Sprint, Part 1: Clustering exercise

Major themes / trends that emerged:

- desire for the bot to express positive care

- people dealing with loneliness

- people feeling misunderstood

- general frustration with AI

- much sex-positivity

- desire for the bot to help with emotional labor / hold “emotional baggage”

Design Sprint, Part 2: How might we design a better AI/chatbot for. . . ?

The full list of HMWs from our session:

How might we make it more sequential?

How might we make it more emotionally intelligent?

How might we make it relatable? - give it a backstory / a lot of stories!

How might we give it its own sense of desire?

How might we make the language more understandable?

How might we make it seem like it’s listening?

How might we make it more supportive?

How might we make the AI more humble about its shortcomings?

How might we give it a (sensitive) sense of humor?

How might we give it context or sense of purpose?

How might we build trust with an AI?

How might we make it more consensual?

How might we teach it to ask open-ended questions?

How might we incorporate nonverbal cues or non-text behavior?

How might we include statements of affirmation?

In discussing A.I. more broadly, participants expressed dis-ease when:

- an A.I. guesses correctly (autocomplete).

- an A.I. replicates abusive communicative behaviors.

- it feels like an A.I. is judging you, for example by pushing you to sound warmer in your emails.

- it feels like the A.I. is gas-lighting you.

Participants also discussed boundaries and reciprocity, asking:

When considering relationships with people vs relationships with tech, is there a relationship of reciprocity? Do we ask this of machines?

What do we want for/from A.I.? Is it a substitute for humans? Should it have its own boundaries?

Some preconceptions about the chatbot:

“I was intrigued by the idea.”

“I can speak freely, it will understand.”

“It might be for people who don’t have support groups.”

Participants’ observations after interacting with the chatbot:

“Seems like a noncommittal sexting bot. It should at least be clear about what it’s trying to do.”

“Could be more humble.”

“The bot is very traumatized.”

Participants’ “welcome text” suggestions for improving the chatbot’s style of engagement:

“How would you like me to treat you?”

“Tell me about yourself.”

“We just met and I like you.”

THANK YOU to everyone who participated and shared their time with us! We had so much fun!

Open questions for future collaborators (yes, YOU!):

How might we create opportunities for collaboration and sustaining community?

How might we find and create opportunities for mentorship and technical support so that we can level up and teach each other how to train/queer more bots with the texts/corpus of your choice?

Plz stay in touch!

Collab with us! Or sign up for our mailing list:

https://queer.ai

Follow us on Instagram:

@blerchin Ben

@queerai Emily