@karthikeyank sir, I think there is no need to build a new LM; it will adapt to the existing DeepSpeech LM.

So only fine-tuning the acoustic model will give better results, right?

Yes.

But your words must be in the LM (which should already be the case).

Okay, in that case, how can I add my corpus words to the existing LM, so that I keep the existing knowledge base and also gain knowledge of the new words? Is there any way to do that?

Yep.

Download the complete vocabulary file of the latest DeepSpeech model,

add your own sentences, and build the LM.
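A minimal Python sketch of the "add your own sentences" step, assuming the downloaded vocabulary file is plain text with one sentence per line (the format KenLM's `lmplz` reads). The helper names `normalize` and `merge_corpus` and the file names are hypothetical; lower-casing assumes a lowercase English alphabet like the one the released English models use.

```python
from pathlib import Path


def normalize(sentence):
    # Lower-case and collapse runs of whitespace (assumes a lowercase alphabet).
    return " ".join(sentence.lower().split())


def merge_corpus(vocab_path, extra_sentences, out_path):
    """Write the vocabulary file's sentences plus extra_sentences to out_path.

    One sentence per line; blank lines are dropped. Returns the line count.
    """
    lines = Path(vocab_path).read_text(encoding="utf-8").splitlines()
    merged = [normalize(line) for line in lines if line.strip()]
    merged += [normalize(s) for s in extra_sentences if s.strip()]
    Path(out_path).write_text("\n".join(merged) + "\n", encoding="utf-8")
    return len(merged)
```

The resulting file (for example a hypothetical `corpus.txt`) is the text you would then pass to KenLM to build the new LM.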


But are you sure that your words aren't already in the model?

An easy way to check: record the needed sentences with a good online US English text-to-speech service,

convert the audio to 16 kHz mono, and test the model…
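A small Python sketch for verifying the converted file before testing, using only the standard `wave` module. It assumes the model expects 16 kHz, mono, 16-bit PCM WAV input; the function name is hypothetical and it only inspects the header, it does not convert. The conversion itself is usually done with a tool such as SoX, e.g. `sox in.wav -r 16000 -c 1 -b 16 out.wav`.

```python
import wave


def is_deepspeech_ready(source):
    """Return True if the WAV file (path or file object) is 16 kHz, mono, 16-bit.

    Header check only: nothing is resampled or rewritten.
    """
    with wave.open(source, "rb") as wav:
        return (
            wav.getframerate() == 16000
            and wav.getnchannels() == 1
            and wav.getsampwidth() == 2  # 2 bytes per sample = 16-bit PCM
        )
```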

I did this for some tests, and it worked perfectly.

Hope it will help.

Okay, I will try. Can you please share the link where I can get the vocabulary file of the latest model, if you know it?

Thanks.

Hi @elpimous_robot, if you don't mind, can you please explain the following flags?

--early_stop True --earlystop_nsteps 6 --estop_mean_thresh 0.1 --estop_std_thresh 0.1 --dropout_rate 0.22

Hello.

Early stopping and its parameters are used to limit overfitting.
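A rough Python sketch of the kind of rule these flags configure, with defaults copied from the command above (`nsteps` for `--earlystop_nsteps`, `mean_thresh` for `--estop_mean_thresh`, `std_thresh` for `--estop_std_thresh`). It is an assumption modeled loosely on DeepSpeech's training loop, not the exact code of any release; check `DeepSpeech.py` for your version.

```python
from statistics import mean, pstdev


def should_stop_early(dev_losses, nsteps=6, mean_thresh=0.1, std_thresh=0.1):
    """Decide whether to stop, given one validation loss per epoch.

    Looks at the last `nsteps` losses (nsteps must be >= 2). Stops if the
    newest loss is worse than every earlier loss in the window, or if the
    window has flattened out: newest loss close to the mean of the earlier
    ones, and little spread among them.
    """
    if len(dev_losses) < nsteps:
        return False  # not enough history yet
    window = dev_losses[-nsteps:]
    previous, latest = window[:-1], window[-1]
    if latest > max(previous):
        return True  # validation loss went up: likely starting to overfit
    return abs(latest - mean(previous)) < mean_thresh and pstdev(previous) < std_thresh
```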

dropout_rate serves the same purpose.
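For intuition about `--dropout_rate 0.22`, here is the standard "inverted dropout" technique in plain Python. It is an illustration only: DeepSpeech applies dropout inside its TensorFlow graph, not with this code, and the function name is hypothetical.

```python
import random


def inverted_dropout(activations, rate=0.22, rng=None):
    """Zero each activation with probability `rate`; scale survivors by 1/(1-rate).

    The scaling keeps each unit's expected value unchanged, so at inference
    time dropout is simply switched off with no extra rescaling.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    rng = rng or random.Random()
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]
```

During training roughly 22% of the units are dropped on each step, which discourages the network from relying on any single unit.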

Perhaps you could read up on TensorFlow's training parameters.

Have a nice day.

Vincent

Yeah… thank you!

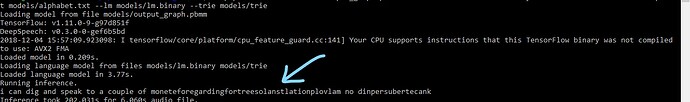

Hi, I am using DeepSpeech 0.4.1 to develop an Urdu-language ASR system.

I have built the language model, prepared the data, and written the alphabet in alphabets.txt as per the guidelines in this post.

Now I am trying to generate the trie file, but I am getting this error:

ERROR: VectorFst::Write: Write failed:

Please help. Thank you so much!

Could you give a bit more info on how you’ve attempted to generate the trie? For example the command line and arguments you ran.

/home/rc/Desktop/0.4.1/DeepSpeech-master/native-client-U/generate_trie //home/rc/Desktop/0.4.1/DeepSpeech-master/data/alphabet.txt //home/rc/Desktop/0.4.1/DeepSpeech-master/data/lm/lm.binary //home/rc/Desktop/0.4.1/DeepSpeech/data/trie

I am following this tutorial to generate the trie file.
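One thing worth checking in the command above: the inputs live under `.../DeepSpeech-master/data/` but the trie is written to `.../DeepSpeech/data/trie`. If that directory does not exist or is not writable, a write failure like the one reported would be a plausible result (an assumption, not confirmed in this thread). A small Python sketch that checks the three paths before running `generate_trie`; the helper name is hypothetical.

```python
import os


def check_generate_trie_paths(alphabet, lm_binary, trie_out):
    """Return a list of problems with generate_trie's paths (empty if none found).

    Checks that both inputs exist and that the trie's target directory
    exists and is writable by the current user.
    """
    problems = []
    for label, path in (("alphabet", alphabet), ("lm.binary", lm_binary)):
        if not os.path.isfile(path):
            problems.append(f"{label} not found: {path}")
    out_dir = os.path.dirname(os.path.abspath(trie_out))
    if not os.path.isdir(out_dir):
        problems.append(f"output directory does not exist: {out_dir}")
    elif not os.access(out_dir, os.W_OK):
        problems.append(f"output directory is not writable: {out_dir}")
    return problems
```

Calling it with the same three paths as the `generate_trie` command prints nothing useful on success (an empty list) and names the offending path otherwise.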

One thing I changed was to bump the n_hidden size up to an even number (1024, based on issue #1241's results). The first time I ran my model with an odd number, it returned a warning and the WER wasn't great:

“BlockLSTMOp is inefficient when both batch_size and input_size are odd. You are using: batch_size=1, input_size=375”
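The warning's condition is easy to check up front. A tiny Python sketch, assuming (as the warning suggests) that the LSTM's input_size tracks `--n_hidden`, so an even `n_hidden` avoids the "both odd" case regardless of batch size. Both helper names are hypothetical.

```python
def blocklstm_inputs_both_odd(batch_size, input_size):
    # Mirrors the condition stated in the warning text: both sizes odd.
    return batch_size % 2 == 1 and input_size % 2 == 1


def next_even(n):
    """Round n up to the nearest even number (375 -> 376, 1024 -> 1024)."""
    return n if n % 2 == 0 else n + 1
```

With batch_size=1 and input_size=375 the condition holds; with an even size such as 1024 (the value used above) it does not.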

How do I create the lm/trie?

Hi @yogesha,

Your first post… perhaps you could start with a simple "hello…"

Welcome to this Discourse section.

Have a look at your DeepSpeech directory:

Deepspeech/bin/lm

In the files there, you'll find the commands for LM creation.

You'll also need to install KenLM (see the beginning of the tutorial)!
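As a sketch of the usual order of steps (KenLM's `lmplz` to build an ARPA file, `build_binary` to convert it, then DeepSpeech's `generate_trie` with the three arguments used earlier in this thread), here is a small Python helper. It uses default KenLM settings; the scripts shipped with your DeepSpeech version may pass extra options such as pruning or quantization, so treat this as an assumption and prefer those scripts. File names and helper names are hypothetical.

```python
import subprocess


def lm_build_commands(corpus, order=5, arpa="lm.arpa", binary="lm.binary",
                      alphabet="alphabet.txt", trie="trie"):
    """Return the LM + trie command lines in the order they must run."""
    return [
        ["lmplz", "--order", str(order), "--text", corpus, "--arpa", arpa],
        ["build_binary", arpa, binary],
        ["generate_trie", alphabet, binary, trie],
    ]


def run_all(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first failing step
```

Running `run_all(lm_build_commands("corpus.txt"))` only prints the three commands, which is a safe way to review them before passing `dry_run=False`.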

Have a nice day, YogeshA.

Hi, thanks for your tutorial. I have a little question: how can I compile this? I am not an expert in this topic.

Hi.

Yes, you need to compile the KenLM libraries…

To compile DeepSpeech's native client, if needed, see the README.md in the native client directory.
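After compiling, the tools must be reachable before any LM commands will work. KenLM's CMake build typically places `lmplz` and `build_binary` under its `build/bin` directory, and `generate_trie` comes with the native client build; both locations are assumptions to verify on your machine. A quick Python check using `shutil.which` (helper names are hypothetical):

```python
import shutil

TOOLS = ("lmplz", "build_binary", "generate_trie")


def find_tools(names=TOOLS):
    """Map each tool name to its full path on PATH, or None if not found."""
    return {name: shutil.which(name) for name in names}


def missing_tools(names=TOOLS):
    """Return the tools that could not be found."""
    return [name for name, path in find_tools(names).items() if path is None]
```

If `missing_tools()` is not empty, add the relevant build directory to `PATH` or call the binaries by their full path.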

That is indeed very informative in terms of coding up the robots